Quantifying Code Complexity and Cognition

Table of Contents

- 1. Overview

- 2. Contributions

- 3. The Road to a Topic

- 4. The eyeCode Experiment

- 5. The Nibbles Model

- 6. The Mr. Bits Model

- 7. Threats to Validity

- 8. Conclusions

- 9. Acknowledgments

1 Overview

- Path to dissertation topic

- Dissertation scope

- eyeCode experiment(s)

- Nibbles model

- Mr. Bits model

- Conclusion

2 Contributions

- Framework for quantifying cognitive complexity of code

- eyeCode experiment(s) and data

- eyeCode eye-tracking data analysis library

- Mr. Bits eye-tracking model (ACT-R)

- Nibbles interpretation model (Cognitive Domain Ontology)

3 The Road to a Topic

3.1 Original Concept

- Cognitive trade-offs between general vs. specific code (Veldhuizen, 2007)

// Very specific double sum (double* x, int n) { double s = 0.0; for (int i = 0; i < n; ++i) { s += x[i]; } return s; } // Very general template<typename T, typename Iter, typename Op> T sum(Iter x, Iter end, Op op, T unit) { T s = unit; for (; x != end; ++x) { s = op(s, *x); } return s; }

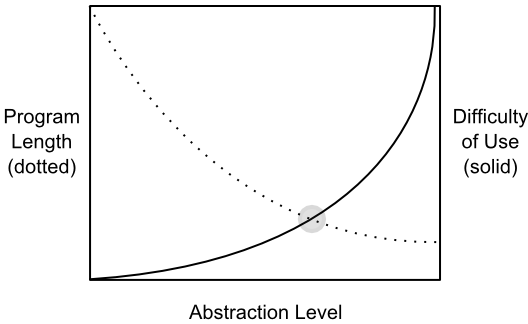

3.2 Abstraction Tradeoffs

- Minimum Description Length principle (Veldhuizen, 2007)

- Minimize the sum of:

- the code length required to implement the component; and

- the code length required to adapt the component to the desired use cases

- Minimize the sum of:

- Code length != cognitive complexity

3.3 Formalizing Code Complexity

- Common metrics: number of tokens, lines, classes, etc.

- Short-term memory 7 +/- 2 chunks (Miller, 1956)

- Always increase with size of code base

- Spatial/graph metrics (assume task)

- Weyuker's Properties:

- Not all programs should have the same complexity

- The set of programs whose complexity is \(c\) is finite

- Some programs share the same complexity

- Functional equivalence does not imply complexity equivalence

- Concatenation cannot decrease complexity

- Context matters for complexity after concatenation

- The order of statements matters

- Identifier and operator names do not matter

- Concatenated programs may be more complex than the 'sum of their parts

3.4 Software Design - Cognitive Aspects

- Françoise Détienne (2001)

"Many claims are made for the efficacy and utility of new approaches to software engineering - structured methodologies, new programming paradigms, new tools, and so on. Evidence to support such claims is thin and such evidence, as there is, is largely anecdotal. Of proper scientific evidence there is remarkably little.

Futhermore, such as there is can be described as 'black box', that is, it demonstrates a correlation between the use of certain technique and an improvement in some aspect of the development. It does not demonstrate how the technique achieves the observed effect."

3.5 Cognitive Complexity of Code

- What makes (some) code hard(er) to understand (than other code)?

- Problem domain

- Programmer expertise (domain, language)

- Code (abstractions, layout, IDE)

- Task (reusing, extending)

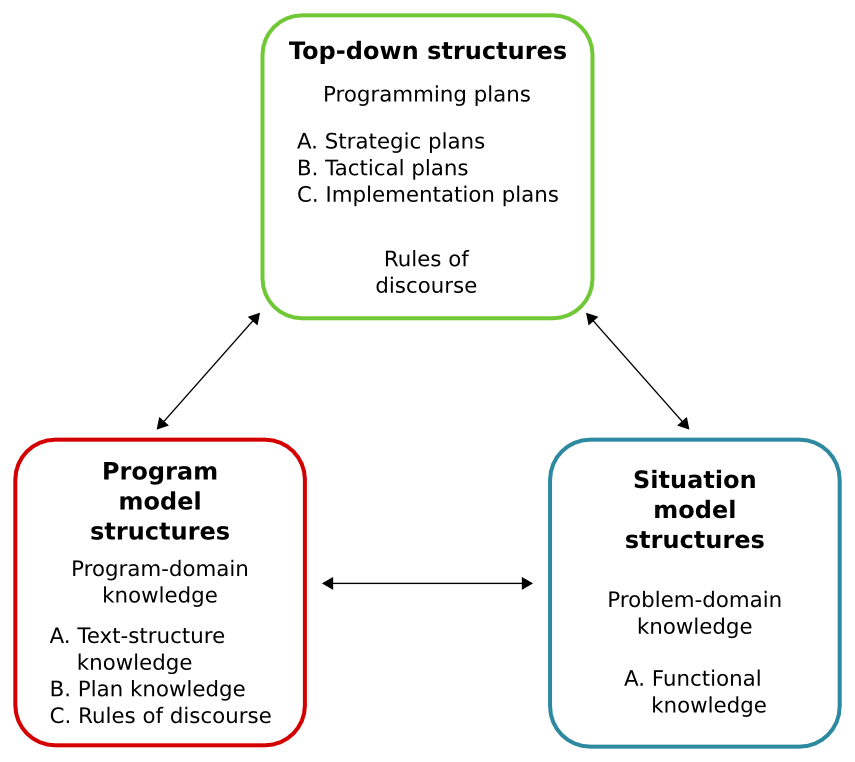

3.6 The Integrated Meta-Model

- von Mayrhauser, 1995

- Common elements from 6 cognitive models of programming

- Code is a structured text document

- Understood via top-down and bottom-up processes

- Plans = schemas/mental templates

3.6.1 The Integrated Meta-Model Details

- "Any of the three submodels may become active at any time during the comprehension process."

- Qualitative model - not enough detail!

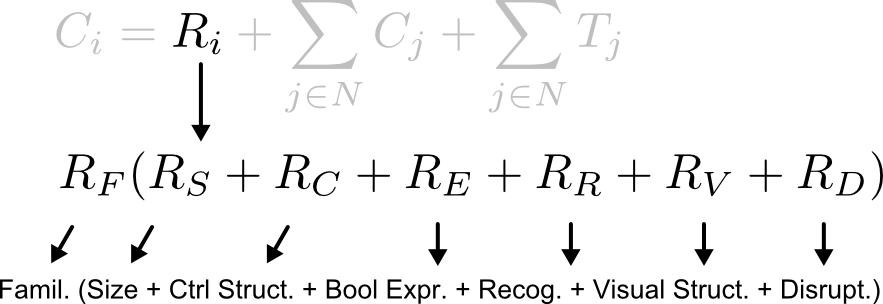

3.7 The Cognitive Complexity Metric

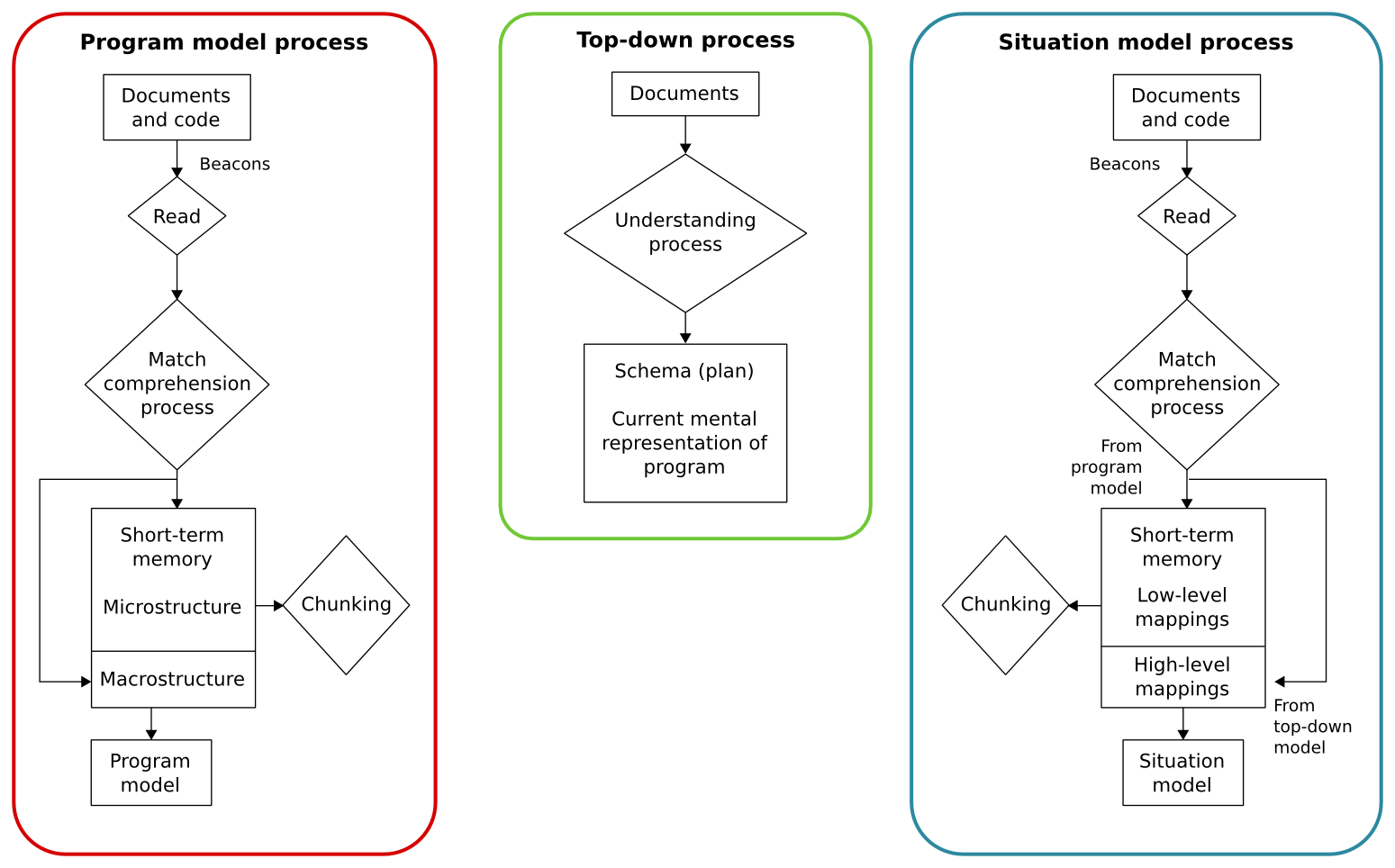

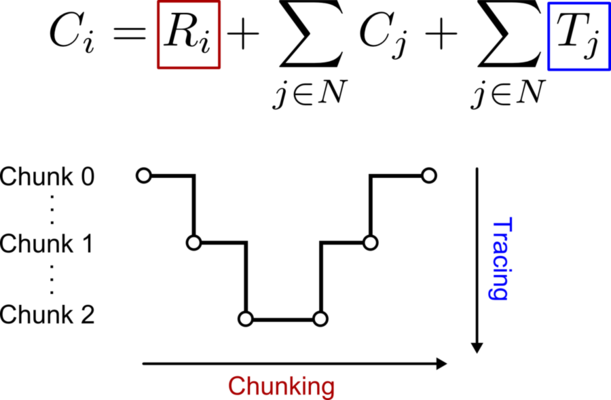

- Process model of code comprehension (Cant, 1995)

- Complexity = Chunking (code) + Tracing (dependencies)

3.7.1 Chunking (CCM)

- Internalizing code snippets that "necessarily go together"

- Faster for familiar, schematic chunks

3.7.2 Tracing (CCM)

- Locating chunk dependencies

- Faster for closer, more familiar dependencies

3.8 Dissertation Scope

- Quantitative Cognitive Model of a programmer

- Basis for definition of cognitive complexity

- End-to-end model ideal (code to keystrokes)

- Should predict performance and errors

- Goal: rank two programs by their cognitive complexity

- Programs must solve the same problem

- Same language (sub-set of Python 2)

- Model and programmer share same task (output prediction)

3.9 A Tale of Two Models

- Mr. Bits (ACT-R cognitive architecture)

- End-to-end model, no programmer errors

- Based on eyeCode eye-tracking experiment data (29 ppl)

- Predicts custom performance metrics

- Nibbles (Cognitive Domain Ontology, constraint solver)

- Code to "mental model" representation

- Based on eyeCode Mechanical Turk experiment data (133 + 29 ppl)

- Predicts interpretation errors

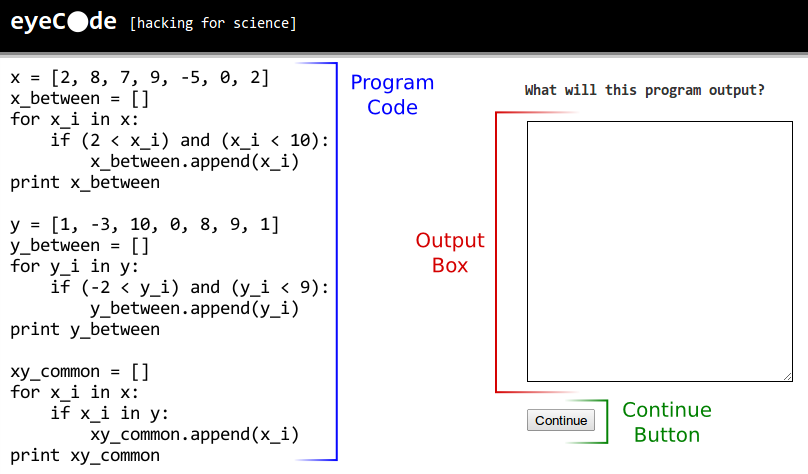

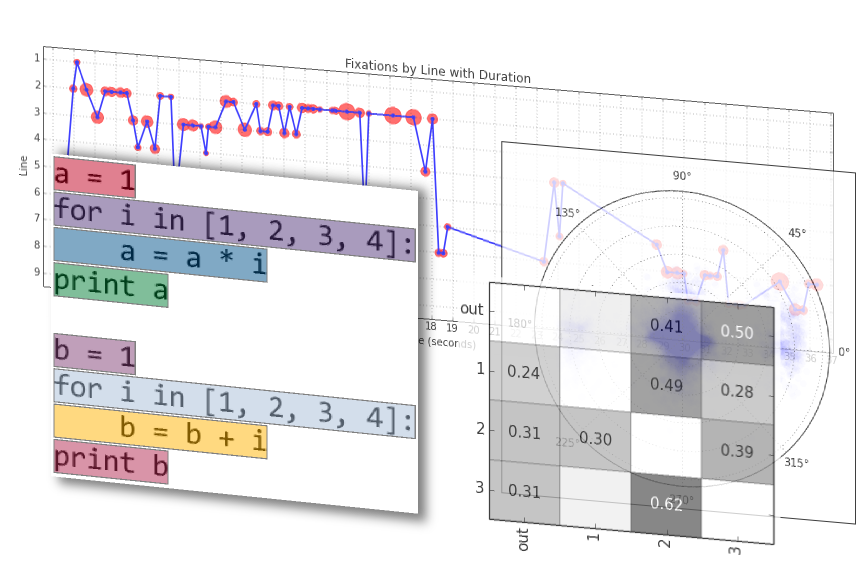

4 The eyeCode Experiment

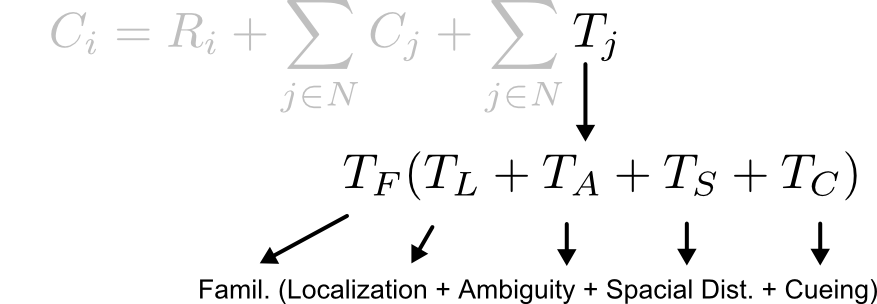

4.1 Experiment Format

- Programmers predict printed output of 10 short Python programs

- No external libraries, syntax highlighting, or feedback

- Multiple versions of each program (randomly assigned)

4.2 Demographics

- 162 participants (29 local, eye-tracker)

- 129 male, 30 female, 3 unreported

- 28.4 years mean age

- 6.9 years mean programming experience

- 2.0 mean years Python experience

- 52.5% with a Computer Science degree

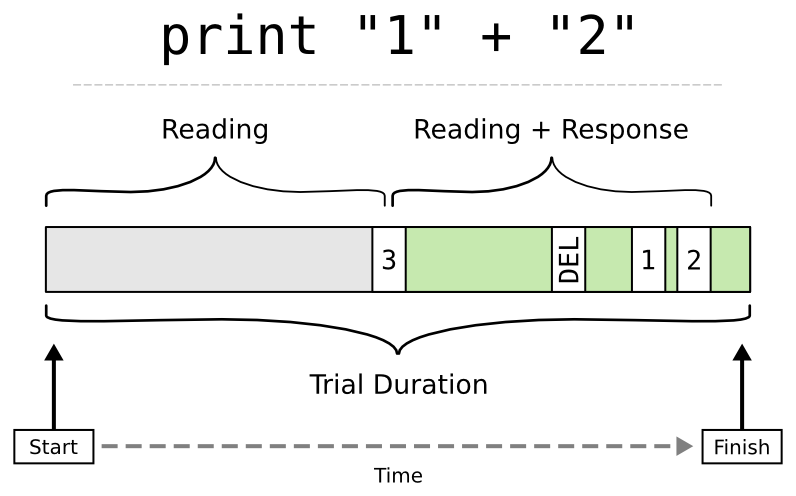

4.3 Performance Metrics

- Output distance

- String-edit distance between actual and true response

- Trial duration

- Keystroke coefficient

- # of actual keystrokes / # of required keystrokes

- Response proportion

- Time responding / trial duration

- Response corrections

- # of times response shrunk (then grew)

4.4 Quantifying Errors

- Correct vs. Incorrect

- Participant response matches true output, w/o formatting

1 2 3same as[1, 2, 3]- 75% correct trials, 64% perfect trials

- Other performance metrics can predict correctness

- Response corrections and keystoke coefficient

- Extra time responding = bad

4.5 Most Common Errors

- counting twospaces (only 1 "Done counting")

for i in [1, 2, 3, 4]: print "The count is", i print "Done counting"

- scope (22 instead of 4)

def add_1(num): num = num + 1 def twice(num): num = num * 2 added = 4 add_1(added) twice(added) add_1(added) twice(added) print added

5 The Nibbles Model

5.1 Overview

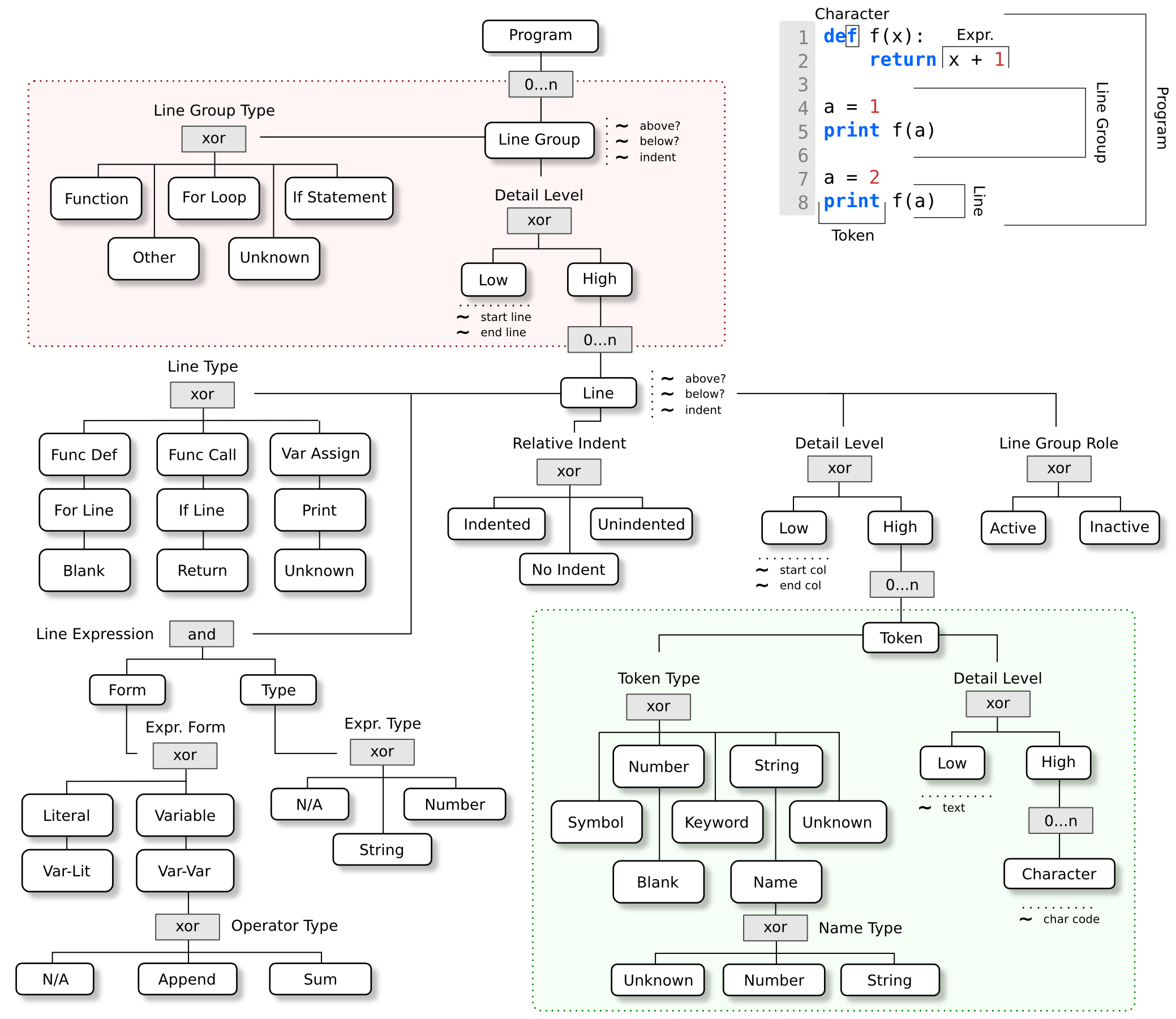

- Program is represented as a Cognitive Domain Ontology (CDO)

- Hierarchical domain representation

- All possible programs

- Observed code constrains CDO

- Limits interpretation of unobserved code

- May change interpretation of previously observed code

- Constraint solver enumerates possible interpretations

- Constraints prune non-sensical programs

- First interpretation considered "best"

5.2 Python Language

- Basic subset of Python 2

- Missing "if"

- Program > Line Group > Line > Token > Character

- All levels have high/low detail, Unknown type

- Tokens

- Symbol, Keyword, Name, Number, Text

- Transaction Role (Input, Output, Action)

- Target Type (Constant, Mutable)

- Lines

- Assignment, Print, For-Loop, Function-Call, Function-Def, Return

- Relative indent to line above

- Right-Hand Side (RHS) expression form and type

- y = x + 5; y : Number, x + 5 : Sum

- Line Groups

- Function, For-Loop, Generic

- All lines belong to 1 line group (contiguous)

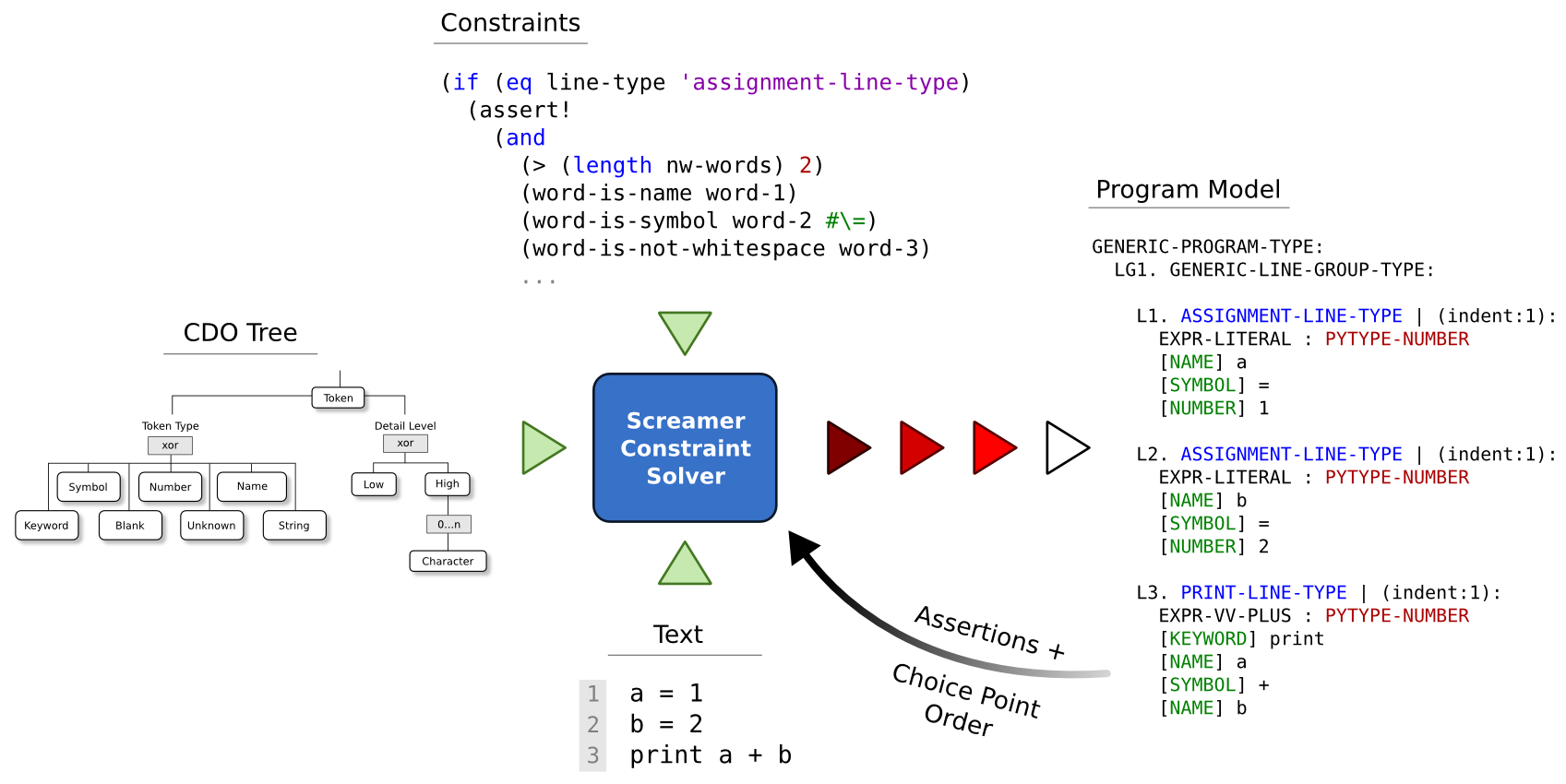

5.3 Code Inference

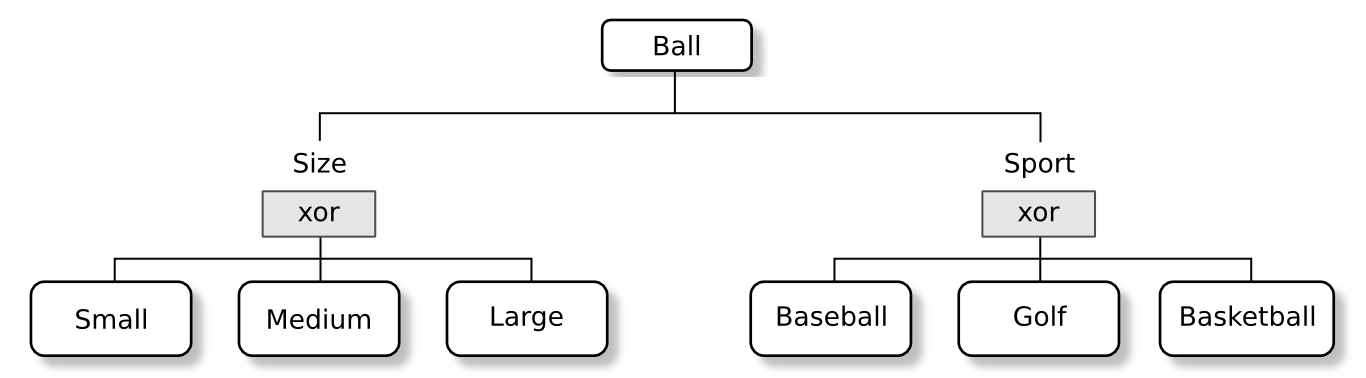

5.4 Cognitive Domain Ontologies

- Entities + Relations = CDO Tree

- Entity variables (e.g., height, name)

- Constraints eliminate solutions

- 3 Relations

- Sub-Parts (and)

- Choice-Point (xor)

- Instances (0..n)

5.5 The Screamer Constraint Solver

- Screamer/Screamer+ Common LISP libraries

- Non-deterministic (either), (fail) forms

- Chronological back-tracking (depth-first, continuations)

5.5.1 LISP Example

(all-values (let ((x (either 'a 'b 'c)) (y (either 1 2 3))) ;; Exclude (B 2) (if (and (eq x 'b) (eq y 2)) (fail)) (list x y))) ;; ((A 1) (A 2) (A 3) (B 1) (B 3) (C 1) (C 2) (C 3))

5.6 Ball Example CDO

5.6.1 Ball Example Constraints

- Unconstrained

- 3x3 choices = 9 solutions: Small-Golf, Small-Baseball, etc.

- Constrained

- Small \(\iff\) Golf, Medium \(\iff\) Baseball, Large \(\iff\) Basketball

- 3 solutions: Small-Golf, Medium-Baseball, Large-Basketball

5.7 Choice Points

- Source of non-determinism

- Solution space size growth

- Linear in number of choices at top level

- Exponential under an Instances (if order matters)

- Classify, group membership, contract/order solution space

5.8 First and Second Order Constraints

- First Order

- Choice points, sub-parts

- 1st-order logic (and, or, not, \(\iff\), etc.)

- Second Order

- Instances, choice points, sub-parts

- 2nd-order logic (every, at-least, all-different, etc.)

- Constraints as arguments

5.9 The Nibbles CDO

5.9.1 Domain Structure

5.9.2 Constraint Example

- Assignment Line (x = y + 1)

- More than 2 tokens

- 1st token is a Name

- 2nd token is '=' Symbol

- 3rd token is not Whitespace

- RHS expression form is not N/A

- …

- … many more

5.9.3 Information Flow

5.10 Example 1

- counting twospaces

for i in [1, 2, 3, 4]: print "The count is", i print "Done counting"

- Error: only 1 "Done counting"

5.10.1 Mechanism

"One possible mechanism for this error is if vertical whitespace primes disjoint grouping of lines – i.e., a participant is more likely to break code lines into different groups when they are separated by enough whitespace." - Hansen, 2015

- Priming Mechanism

- All lines initially in all line groups

- From top to bottom, lines are likely active in group X

- At whitespace threshold, lines become likely active in group X + 1

- Relative indent information overrides priming

5.10.2 Mental Models

- Correct Mental Model

- All lines are in the same line group

- Last line is inline relative to previous line

LG1. FOR-LOOP-LINE-GROUP-TYPE: L1. FOR-LOOP-LINE-TYPE | (indent:1): [KEYWORD-TYPE:3] for ... L2. PRINT-LINE-TYPE > (indent:2): EXPR-LITERAL : PYTYPE-STRING [KEYWORD-TYPE:5] print ... L3. PRINT-LINE-TYPE | (indent:2): EXPR-LITERAL : PYTYPE-STRING [KEYWORD-TYPE:5] print ... - Incorrect Mental Model

- Last line is in a separate line group

- Last line is unindented relative to previous line

LG1. FOR-LOOP-LINE-GROUP-TYPE: L1. FOR-LOOP-LINE-TYPE | (indent:1): [KEYWORD-TYPE:3] for ... L2. PRINT-LINE-TYPE > (indent:2): EXPR-LITERAL : PYTYPE-STRING [KEYWORD-TYPE:5] print ... LG2. GENERIC-LINE-GROUP-TYPE: L3. PRINT-LINE-TYPE < (indent:1): EXPR-LITERAL : PYTYPE-STRING [KEYWORD-TYPE:5] print ...

5.11 Example 2

- overload plusmixed

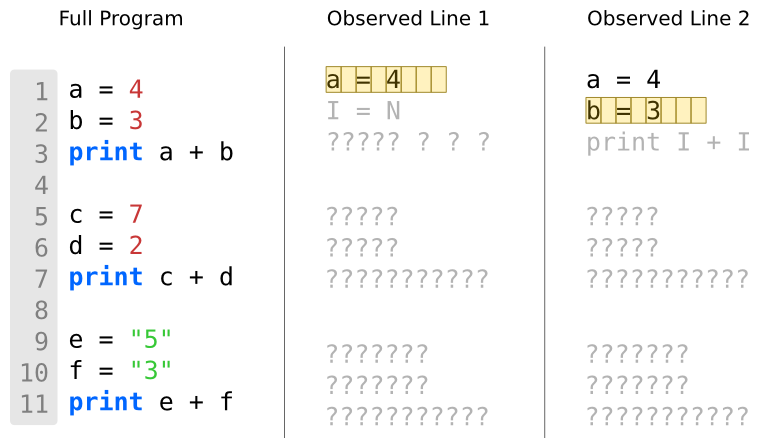

a = 4 b = 3 print a + b c = 7 d = 2 print c + d e = "5" f = "3" print e + f

- Error: Final line prints 8 instead of "53"

5.11.1 Mechanism

- Summation instead of concatenation

- Assume that '+' converts strings to numbers?

- Read "5" and "3" as numbers because of '+' priming

- Relax RHS expression type constraint for assignment

- e = "5"; e : String

- Numeric type assumed if RHS has at least 1 Number token

- Instead of exactly 1 Number token

- Error occurs if sum '+' is primed over append '+'

- Solution with

eandfas numbers comes first - Correct solution possible only if types are correctly asserted

- Solution with

5.11.2 Mental Models

- Correct Mental Model

eandfare strings+is interpreted as string appendL1. PRINT-LINE-TYPE | (indent:1): EXPR-VV-PLUS : PYTYPE-STRING (PLUS-APPEND) [KEYWORD-TYPE:5] print [NAME-WORD-TYPE:1] e (PYTYPE-STRING) [SYMBOL-WORD-TYPE:1] + [NAME-WORD-TYPE:1] f (PYTYPE-STRING)

- Incorrect Mental Model

eandfare integers+is interpreted as numeric sumL1. PRINT-LINE-TYPE | (indent:1): EXPR-VV-PLUS : PYTYPE-NUMBER (PLUS-SUM) [KEYWORD-TYPE:5] print [NAME-WORD-TYPE:1] e (PYTYPE-NUMBER) [SYMBOL-WORD-TYPE:1] + [NAME-WORD-TYPE:1] f (PYTYPE-NUMBER)

5.12 Example 3

- scope diffname

def add_1(num): num = num + 1 def twice(num): num = num * 2 added = 4 add_1(added) twice(added) add_1(added) twice(added) print added

- Error: Prints 22 instead of 4

5.12.1 Mechanism

- Rule of Discourse violation

- "Don't include code that won't be used" (Soloway, 1984)

- Transaction Mechanism

- Smallest piece of knowledge used in program understanding (Mayer, 1987)

- What operation takes place (action)

- Where it takes place (location)

- What object is acted upon (object)

- Transaction (Line)

- Inputs, Outputs, Actions (Tokens)

- Complete if at least one of each type

- Primed for Complete transactions (code that "does something")

- Smallest piece of knowledge used in program understanding (Mayer, 1987)

5.12.2 Transaction Extension

- x = y + 1

- +, = are Actions

- y, 1 are Inputs

- x is an Output (Assign)

- f(x)

- f is an Action

- x is both Input and Output (Modify)

- CDO extension

- Transaction role on Token

- Output type

5.12.3 Target Extension

- f(x)

- f is an Action

- x is both Input and Output (Modify)

- x is not a constant

- CDO extension

- Target type for Name Tokens

5.12.4 Mental Models

- Incorrect Mental Model

- Last line is a complete transaction

numis both input and output

LG1. GENERIC-LINE-GROUP-TYPE: L7. ASSIGNMENT-LINE-TYPE | TX (indent:1): num : PYTYPE-NUMBER EXPR-LITERAL : PYTYPE-NUMBER [NAME-WORD-TYPE:1] num (PYTYPE-NUMBER) O= [SYMBOL-WORD-TYPE:1] = A [NUMBER-WORD-TYPE:1] 4 I L8. FUN-CALL-LINE-TYPE | TX (indent:1): [NAME-WORD-TYPE:5] add_1 A [SYMBOL-WORD-TYPE:1] ( [NAME-WORD-TYPE:5] num (PYTYPE-NUMBER) I O! [SYMBOL-WORD-TYPE:1] ) - Correct Mental Model

numis marked as a constant (line 7)- Additional constraint

- A Name that targets a Constant cannot have a Modify Output Type

- Line 8 cannot be a complete transaction

LG1. GENERIC-LINE-GROUP-TYPE: L7. ASSIGNMENT-LINE-TYPE | TX (indent:1): num : PYTYPE-NUMBER EXPR-LITERAL : PYTYPE-NUMBER [NAME-WORD-TYPE:1] num (PYTYPE-NUMBER, TARGET-CONSTANT) O= [SYMBOL-WORD-TYPE:1] = A [NUMBER-WORD-TYPE:1] 4 I L8. FUN-CALL-LINE-TYPE | (indent:1): [NAME-WORD-TYPE:5] add_1 A [SYMBOL-WORD-TYPE:1] ( [NAME-WORD-TYPE:5] num (PYTYPE-NUMBER, TARGET-CONSTANT) I [SYMBOL-WORD-TYPE:1] )

5.13 Conclusions

- Capabilities

- Code to mental model with errors

- Short-term learning via choice-point reordering

- Form of attention with Unknown choices

- Limitations

- Single file programs, line-by-line

- Accumulated knowledge cannot be discarded/re-evaluated

- Hand-built constraints for specific examples

6 The Mr. Bits Model

6.1 Overview

- Operationalizes parts of the Cognitive Complexity Metric (Cant, 1995)

- ACT-R Cognitive Architecture

- Predicts fixations and keystrokes in the eyeCode experiment

- Assumes no errors are made

6.2 Information Flow

- Raw text to fixation/keystroke timings

- ACT-R script automatically generated and executed

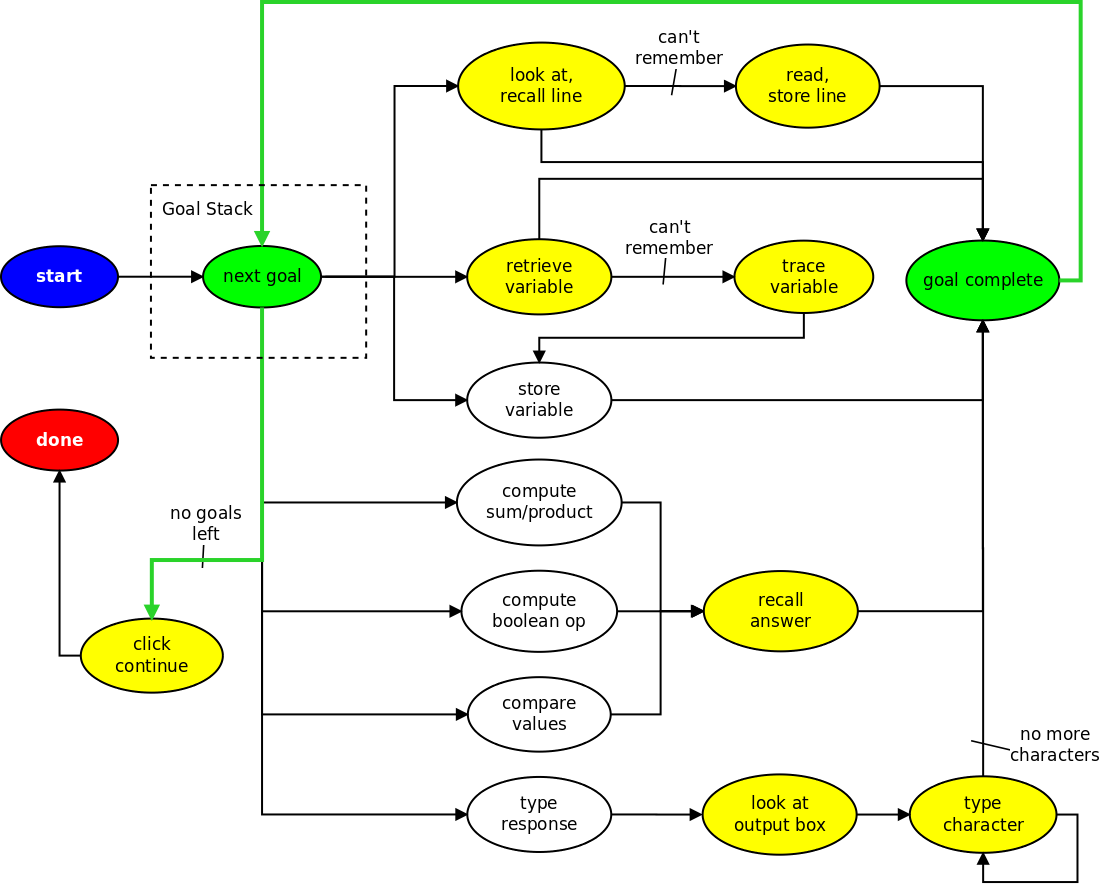

6.3 Goal Stack

- Generated using Python debugger before execution

- Moving to/looking at lines, tokens

- Remember/recall variables, values, locations

- Evalulate expressions

- Respond via keystrokes

- Model runs until goal stack is empty

6.4 Model States

6.5 Goals

| Goal | ACT-R Module(s) | Description |

|---|---|---|

| Go to line | Visual/DM | Moves the virtual eyes to the first character of a line. If the details of the line cannot be retrieved from memory, every token is read. |

| Remember line | DM | Stores the details of the current line in memory. |

| Recall variable | Visual/DM | Attempts to retrieve the value of a variable from memory. Failure results in a retrieval of the variable's location, a visual trace to it, and storage of the result in memory. |

| Store variable | DM | Stores a variable's value in memory. |

| Compute sum/product | DM | Retrieves a sum or product from memory. |

| Compare numbers | DM | Retrieves a numeric relationship (less/greater than) from memory. |

| Evaluate Boolean expression | DM | Retrieves the result of a boolean expression (AND/OR) from memory. |

| Fixate output box | Visual | Locates the output box on the screen and fixates the upper-left corner. |

| Type response | Manual | Types a text response, character by character. |

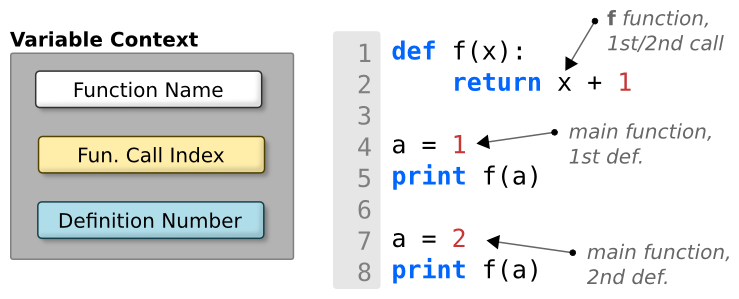

6.6 Variables and Context

- Variables are identified by

- Current function (or global context)

- # of times function is called

- Line (definition) number

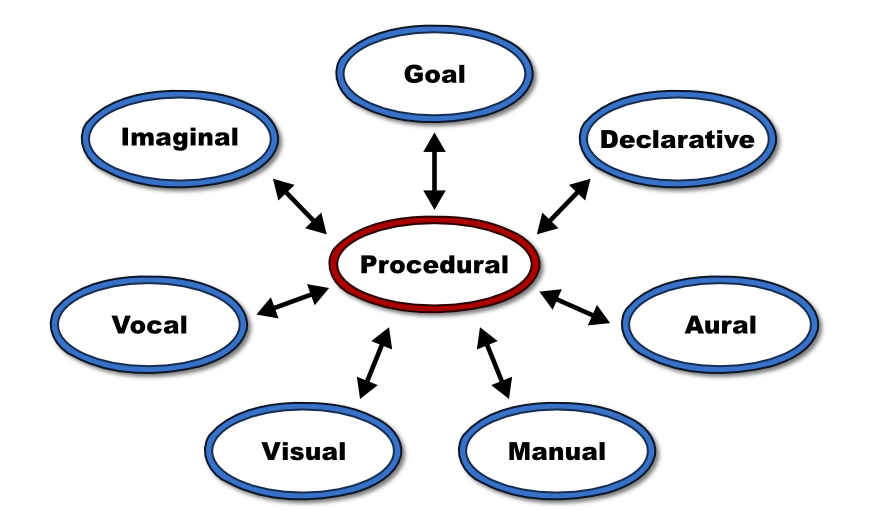

6.7 The ACT-R Cognitive Architecture

6.7.1 Modules

- Production system (procedural module) is communications bottleneck

- Declarative = short/long-term memory

- Imaginal = current problem state

- Vocal/Aural/Manual = speak/hear/type+click

6.7.2 Buffers, Chunks, and Productions

- Modules communicate using buffers

- Buffers contain chunks

- Chunks are collections of key/value pairs

- Productions match existing chunks in buffers (LHS), and produce new chunks in buffers (RHS)

6.7.3 The Subsymbolic Layer

- Modules have symbolic interface, sub-symbolic behavior

- Same symbolic action may take a variable amount of time

- Declarative Memory (DM)

- Retrieval times are based on recency and frequency of chunk use

- Visual

- Time to shift attention depends on current position + noise (EMMA)

- Manual

- Time to press key depends on current "hand" position

6.7.4 ACT-R Models

- Written/executed in Common LISP

;; Defines a production that matches chunks in the Goal/Visual buffers, ;; and produces a new chunk in the Imaginal buffer. (p encode-letter =goal> isa read-letters state attend =visual> isa text value =letter1 ?imaginal> buffer empty ==> =goal> state wait +imaginal> isa array letter1 =letter1 )

6.8 Mr. Bits and ACT-R

- Visual

- Saccades take time based on distance

- EMMA model produces noisy fixations

- Declarative Memory (DM)

- Stores current context (function, variable values)

- Sum/product/boolean knowledge

- Manual

- Fitts' Law-based keystroke timings

- Parmeters adjusted for faster typing speeds

6.9 Sub-Symbolic Calculations

- Sums/Products/Boolean Facts

- Stored in declarative memory

- Recency/frequency retrieval effects

- \((2 \times 2) + (2 \times 2)\) faster than \((2 \times 2) + (2 \times 3)\)

- Lists

- Humans do not remember complete lists (eyeCode)

- Remember Lists (RL) and Forget Lists (FL) versions of model

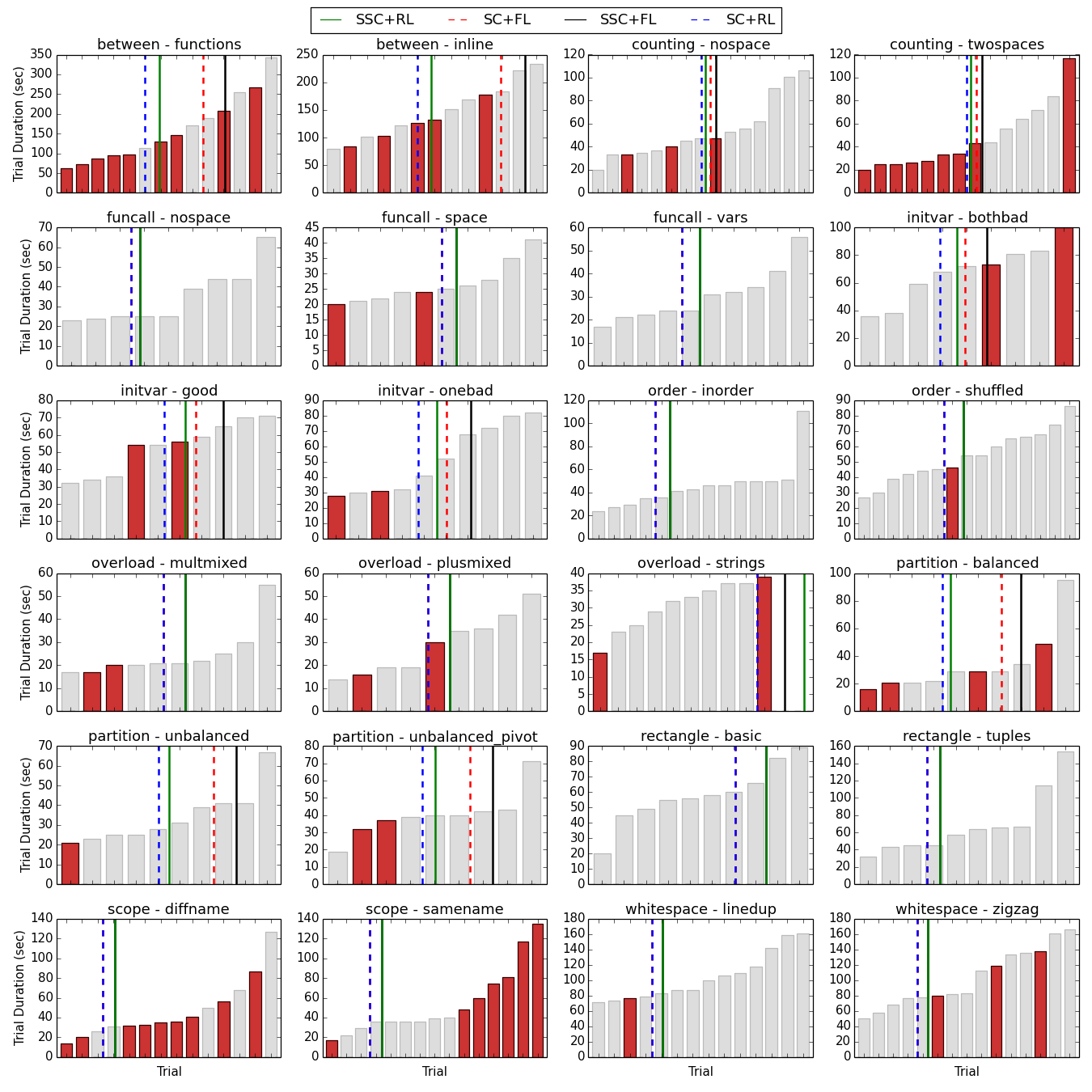

6.10 Results

6.10.1 Trial Times

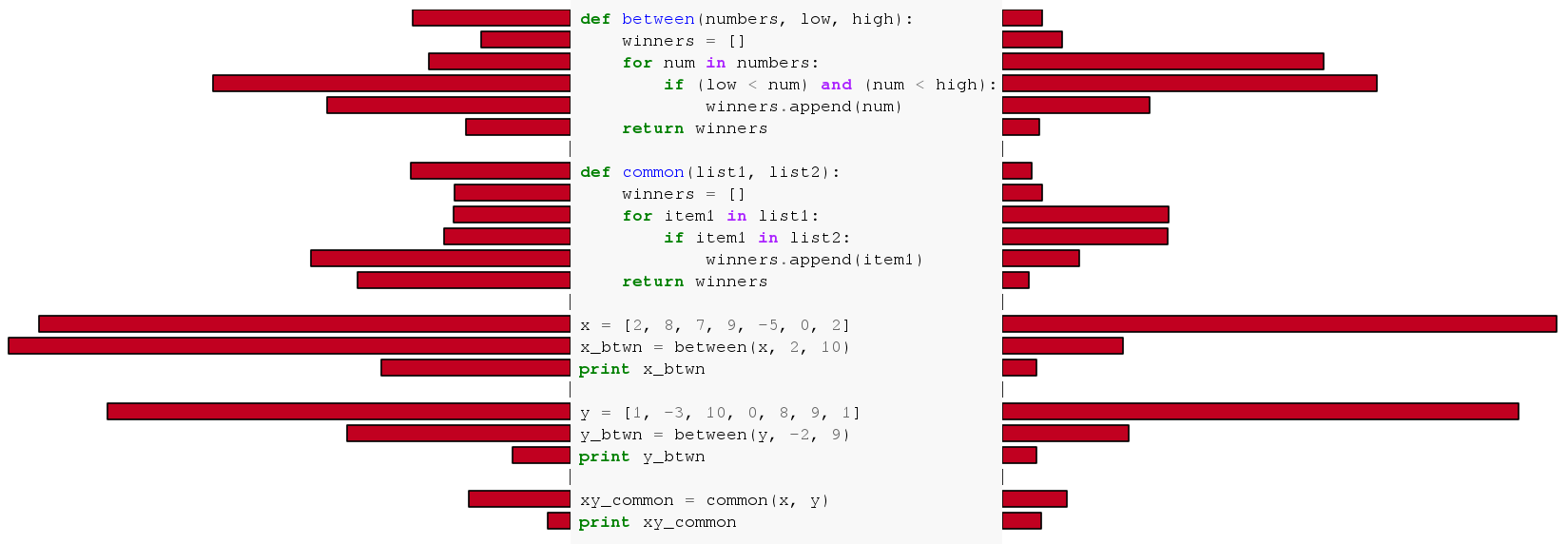

- Mr. Bits trial times (lines) vs. eyeCode participants (bars)

- Red bars = trials with errors

- SC = Symbolic, SSC = Sub-Symbolic

- RL = Remember Lists, FL = Forget Lists

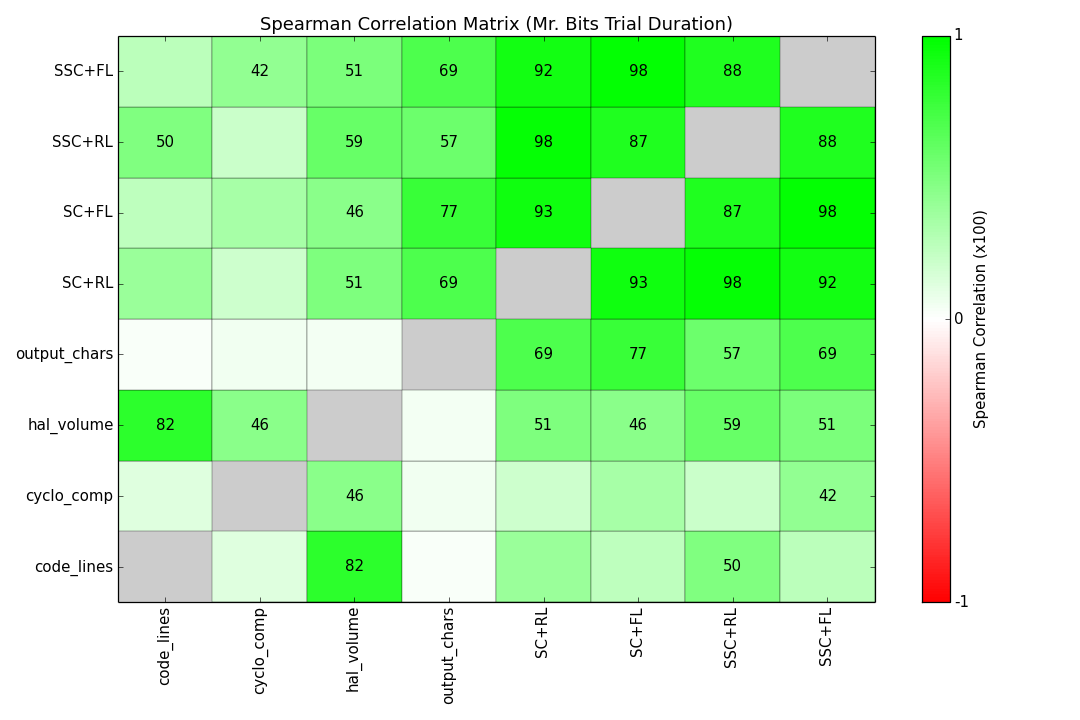

6.10.2 Complexity Metric Comparison

- Mr. Bits trial times vs. complexity metrics

- SC = Symbolic, SSC = Sub-Symbolic

- RL = Remember Lists, FL = Forget Lists

- SSC + FL moderately correlated with most metrics

6.10.3 Relative Line Times

- Relative time spent on each line over entire trial

Human (left) vs. Mr. Bits (right)

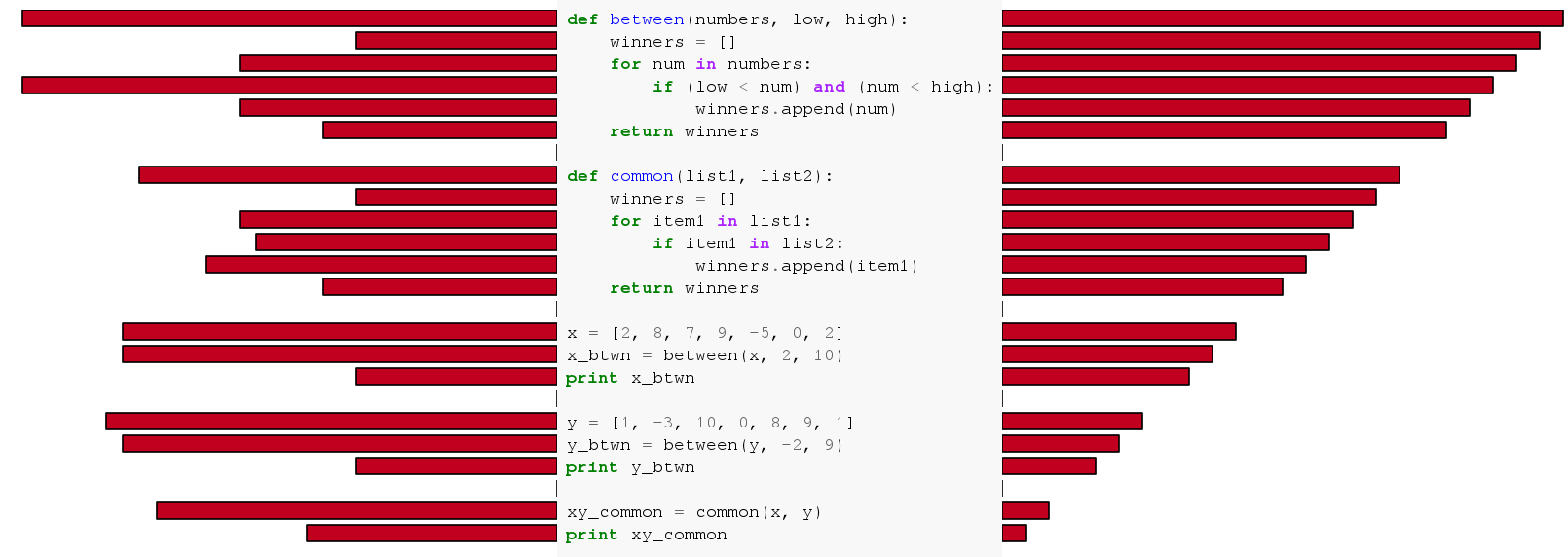

6.10.4 Null Models

- Relative line times based on line length/number

Line length (left) vs. Line number (right)

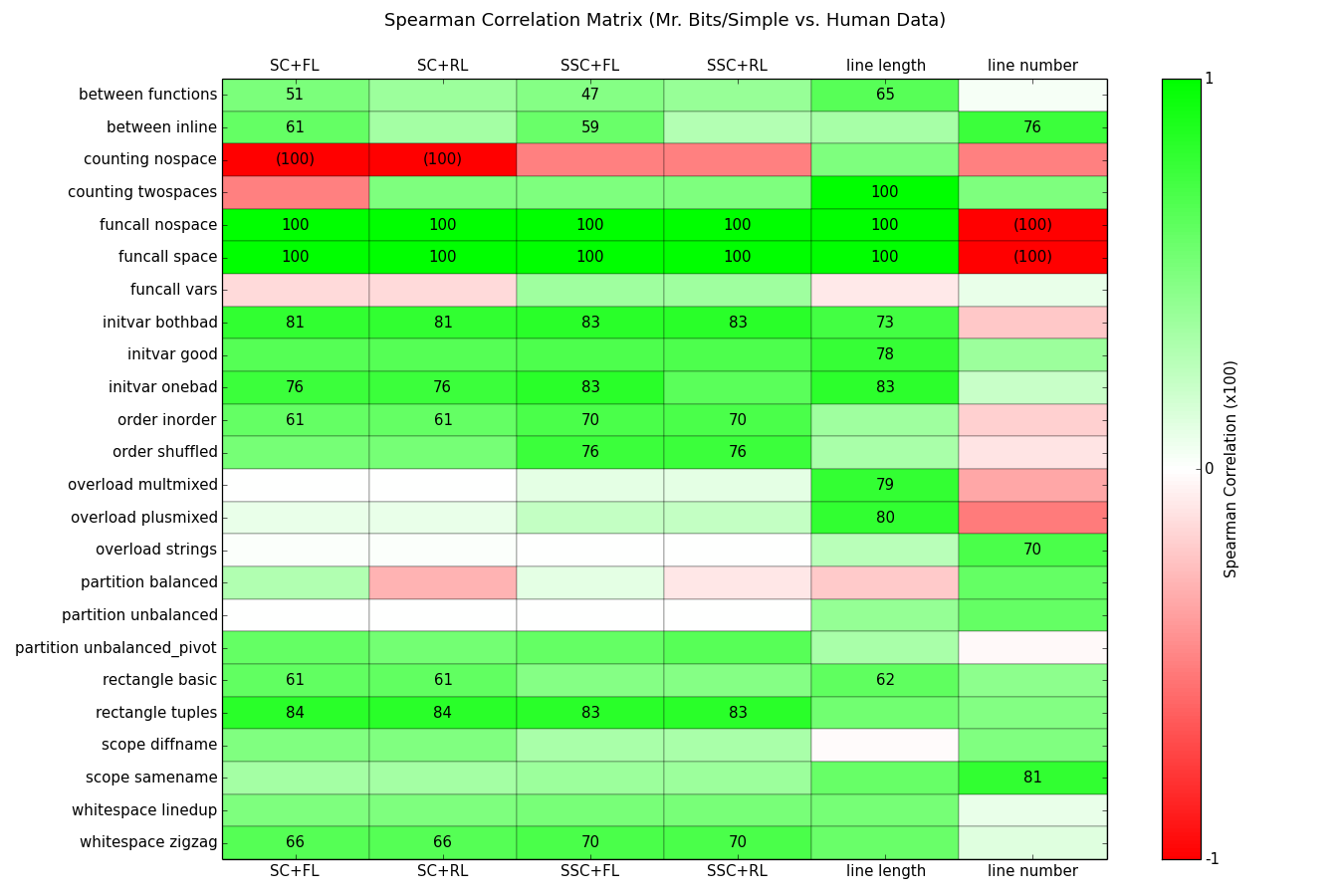

6.10.5 Model Comparison

- Relative line time correlations for all programs

- SC = Symbolic, SSC = Sub-Symbolic

- RL = Remember Lists, FL = Forget Lists

- Best: SSC + FL and line length

6.11 The eyeCode Library

- Python library for eye-tracking analysis/visualization

- Features

- Automatic areas of interest (AOIs) for any Pygments language

- Multi-layered hit-testing, scanpaths, transition matrices

- Static and moving time window metrics

6.12 Conclusion (Mr. Bits)

- Successfully operationalizes parts of Cognitive Complexity Metric (Cant, 1995)

- Chunking (DM)

- Tracing (Visual)

- Comparison with human data

- Strong correlation with relative line times (SSC + FL)

- Average trial times

- Limitations

- Assumes no errors

- Python debugger used to generate goals

- Single file, small programs

- Future Work

- Compare AOI transitions, moving window metrics

- Merge with Nibbles model

7 Threats to Validity

- eyeCode Experiment

- Sampling/technical assumptions from Mechanical Turk

- Tobii eye-tracker calibration/errors

- Looking at = attending to (attention follows gaze)

- Nibbles Model

- Minimal literature on domain knowledge mining as CSP

- Hand-built constraints and structure

- First solution = only/best solution

- Mr. Bits Model

- Abstraction level of CCM and ACT-R

- Dozens of productions (inexplicable model)

- Hand-tuned parameters

8 Conclusions

8.1 Rank two programs by their cognitive complexity

- Mr. Bits trial time

- Overlap with existing complexity metrics

- Sensitive to code layout, reuse, identifier names

- Alternative tasks (besides output prediction)?

- Nibbles solution space

- Constraints and choice point orderings = experience/expectations

- "Cognitive fuzzing" to explore possible errors?

- Time to solution ~ cognitive complexity?

8.2 Quantiative cognitive model of a programmer

- Mr. Bits

- Low-level predictions, medium-level concepts (lines, variables)

- More flexible than GOMS approach

- Visual attention, forgetting, disambiguation

- Nibbles

- Medium-level predictions, higher-level concepts (line groups, program)

- Errors with an explanation

- Visual/domain attention, code abduction, learning

- Both models can be executed and tested empirically

9 Acknowledgments

- Research Committee

- Dr. Andrew Lumsdaine

- Dr. Robert Goldstone

- Dr. Raquel Hill

- Dr. Chen Yu

- Dr. Scott Douglass (AFRL)